Perry Wilson: AI in Medicine is suffering from what I would like to call “The Cassandra Problem”

Quoting Perry Wilson, Director of the Clinical and Translational Research Accelerator at Yale University, on X/Twitter:

“AI in Medicine is suffering from what I would like to call ‘The Cassandra Problem’.

I am thinking about this issue this week thanks to this study, appearing in JAMA Network Open, which examines the utility of a model that predicts blood clots in hospitalized kids.

The model takes in a bunch of data from the electronic health record and makes what turns out to be a pretty accurate prediction about the risk of blood clots (AUC~0.90).

But accuracy is just table stakes for machine learning models. It’s not enough. Enter Cassandra.

Cassandra was a priestess of Troy and daughter of King Priam. Apollo had given her the gift of perfect prophecy – everything she foresaw would come to pass. But she was cursed that no one would ever believe her.

She told her brother Paris, for example, not to go to Sparta to abduct Helen. Doing so, she said, would lead to the fall of Troy. He ignored her, of course, and you know the rest of the story.

The point is – even a perfect prediction is useless if no one does anything about it. Accuracy is table stakes for ML in Medicine. It is not enough. The JAMA Network Open study does the right thing, randomizing the use of the model vs. usual care.

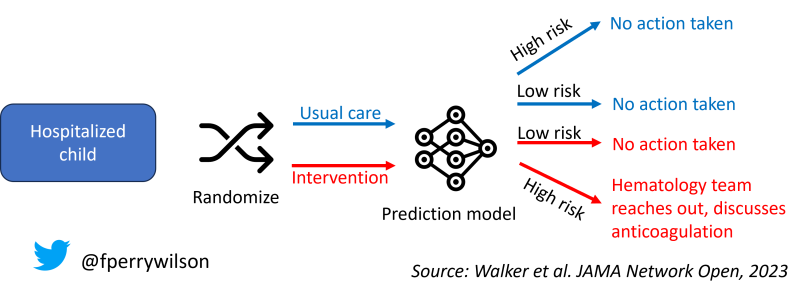

Kids were randomized to use the model or not. In the intervention group, model-predicted high-risk kids would lead to a hematology consult to discuss anticoagulation with the primary team.

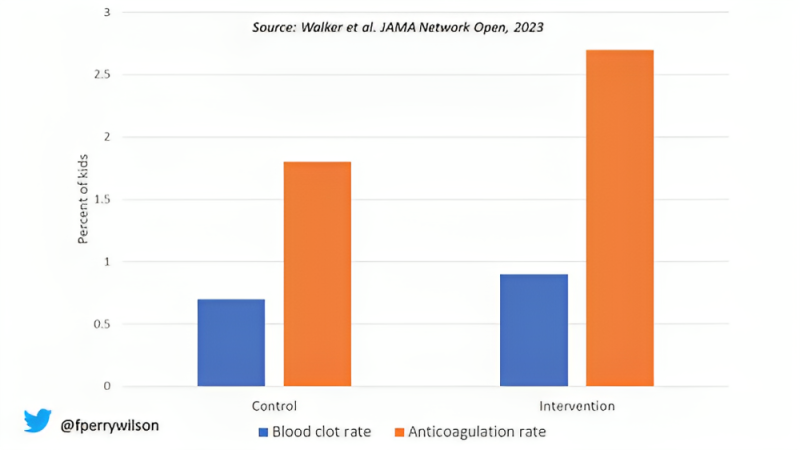

And the model was good! Of 135 kids who developed blood clots, the model had flagged 121 in advance. So, clearly, the use of the model would reduce blood clots, right? Wrong. Blood clot rates did not differ significantly between the usual-care and intervention groups. Why?

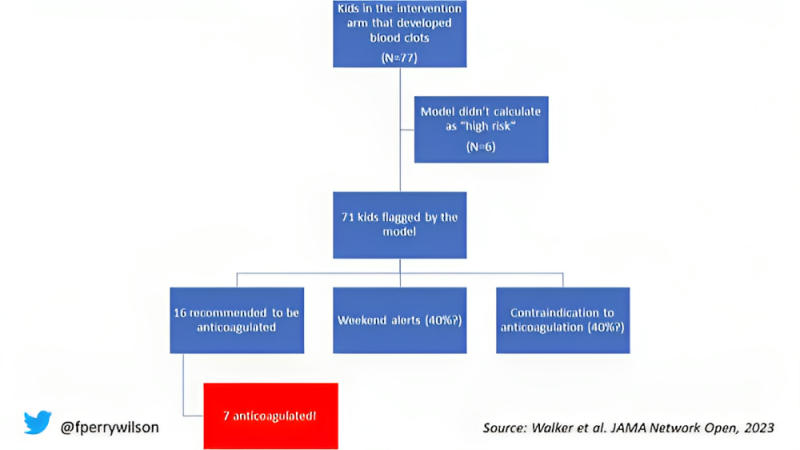

Well, let’s look at the 77 kids in the intervention group that developed blood clots, because they tell us the story of why accurate models fail in clinical practice. Of those 77, the model failed to flag 6. That’s issue #1: accuracy. Table stakes.

Of the remaining 71, a bunch got flagged on the weekend when the hematology team wasn’t around to provide recs to the treatment team. Issue #2: good predictions don’t work if you don’t tell people about it.

A bunch of the kids who were predicted to be at risk of clot (and got a clot!) had contraindications to anticoagulation. Issue #3: good predictions don’t work if you can’t do anything about it.

Of the 16 remaining kids for whom heme recommended anticoagulation, only 7 GOT anticoagulation. Issue #4: good predictions don’t work if people CHOOSE not to do anything about it.

This is the gulf that AI models need to bridge now. The arms race of higher accuracy is an illusion. We need to move to implementation.

So the next time an AI company with a slick slide deck shows you how accurate their model is, ask them if accuracy is the thing that matters most. If they say ‘yes, of course!’, then tell them about Cassandra.

More on this theme in my Medscape column this week.”

Source: Perry Wilson/Twitter
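The thread's argument rests on a short chain of counts, so a few lines of arithmetic make the gap between accuracy and impact concrete. This is a back-of-the-envelope sketch on the numbers quoted above, not an analysis from the JAMA Network Open study. The function names are hypothetical. Because the thread reports the losses to weekend flags and contraindications only as "a bunch", the drop from 71 flagged children to the 16 for whom hematology recommended anticoagulation is treated as a single step.

```python
"""Back-of-the-envelope arithmetic on the counts quoted in the thread.

A sketch only: the function names are hypothetical, and the counts are
taken directly from the thread, not recomputed from the study data.
"""


def sensitivity(flagged: int, total_events: int) -> float:
    """Fraction of children who developed a clot that the model flagged beforehand."""
    return flagged / total_events


def funnel_report(stages: list[tuple[str, int]]) -> list[tuple[str, int, float]]:
    """For each stage, return (label, count, fraction of the first stage's count)."""
    start = stages[0][1]
    return [(label, n, n / start) for label, n in stages]


# 121 of the 135 children who developed clots had been flagged in advance.
overall_sensitivity = sensitivity(121, 135)

# Intervention group only. The thread does not break down the drop from
# 71 to 16 (weekend flags and contraindications, each "a bunch"), so it
# is lumped into a single step here.
intervention_funnel = [
    ("developed a clot", 77),
    ("flagged by the model", 77 - 6),
    ("hematology recommended anticoagulation", 16),
    ("received anticoagulation", 7),
]

if __name__ == "__main__":
    print(f"Sensitivity: {overall_sensitivity:.1%}")
    for label, n, frac in funnel_report(intervention_funnel):
        print(f"{label:<40} {n:>3}  ({frac:.0%} of clots)")
```

Run as a script, it reports a sensitivity of about 89.6%, while only 7 of the 77 children who developed clots in the intervention group (about 9%) actually received anticoagulation.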
