Roupen Odabashian, Hematology/Oncology Fellow at Karmanos Cancer Institute, and Podcast Host at OncoDaily, shared a post on LinkedIn:

“I am a big believer that AI is here to augment and improve the quality of care, and it should be a companion to support physicians.

I have recently been using Doximity’s DoxGPT, and I wanted to share my experience on a weekend inpatient service with ~30 patients, aka the real test.

In this article, I share my experience.

What feels different with DoxGPT:

- Doximity has created a very fast AI clinical decision support (CDS) tool that really makes a difference, especially when you are rounding on inpatients and need answers quickly.

- I love the formatting and the way the AI displays the answers: bullet points and lots of white space between paragraphs. Easy to read and digest.

- You can access drug info in a side panel instead of starting a new chat.

...and much more.

In this article, I summarize my experience using it.

Happy to hear your thoughts if you have used it: what do you like and dislike?

How Doximity GPT Earned a Spot in My Daily Oncology Workflow!

Look, I’m skeptical of new tools. But I’m also an early adopter and happy to grow with the technology. So when I first used Doximity’s DoxGPT last year, I filed it under “cool, but not differentiated” and went back to my usual routine of using other clinical decision support tools.

But here’s the thing: I heard there had been updates recently, so I wanted to give it another try. There is no better way to test a tool than a busy weekend in the hospital with 30 patients to round on.

The Real Test Setting: A Weekend on Service

Let me paint you a picture. It’s 6:45 AM, pre-rounds on a busy inpatient oncology service. I’ve got 30 patients on my list, two new consults from overnight, and a nurse is texting me about a concerning overnight scan.

What Actually Makes It Different

1. Speed That Actually Matters

Two seconds for a response. Consistently. This is different from many current CDS tools, where you have to wait 4-5 seconds for an answer.

You might think, “What’s the difference between two seconds and five seconds?” but when you’re on rounds with 30 patients and a noon conference, those seconds compound. More importantly, when a tool is fast enough, you stop hesitating to use it. I don’t think, “Is this question worth the wait?” I just ask.

That psychological shift is huge. It turns the tool from something I use deliberately into something I use reflexively.

2. The Formatting That Respects My Time

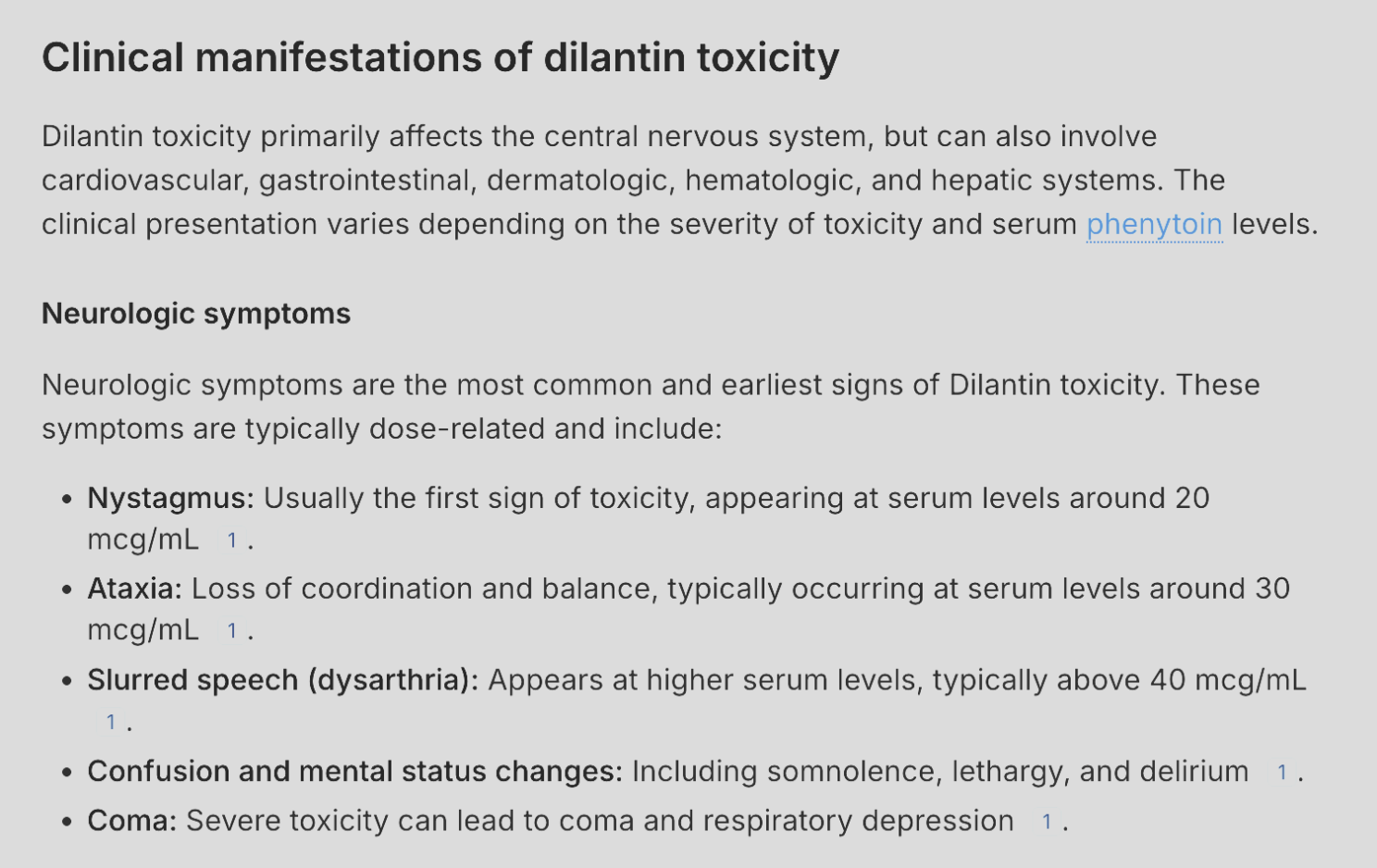

Answers come back in bullets and tables. Not walls of text. Not paragraphs I have to parse under time pressure.

This makes a bigger difference than you’d think. When I’m building a differential or reviewing management options, I can scan the response in five seconds and extract what I need. That’s time saved, but it’s also cognitive load saved – and in a field as dense as oncology, cognitive load is the real bottleneck.

I have used other CDS tools, and I was frustrated that their answers always came as narrative paragraphs, which, for me at least, are harder to read than bullet points.

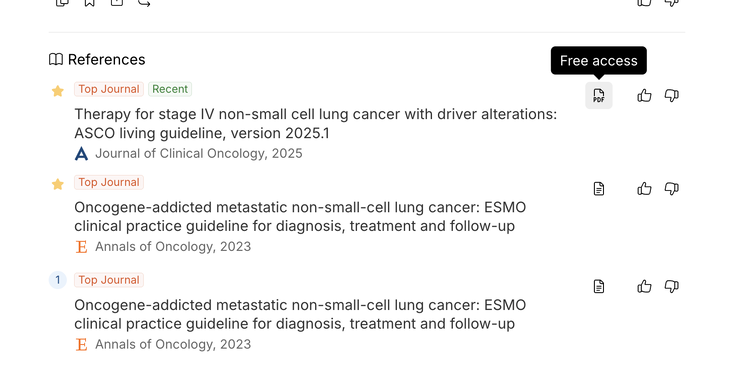

3. Full-Text Access When It Matters

When DoxGPT cites a paper, there’s often a small PDF icon next to it. Click it, and you can download the full-text article. No paywall. No institutional login loop. Just the paper.

There’s a limit: currently five downloads per month. I usually access papers through my institution’s academic access, but for up to five papers a month this route saves some extra clicks and windows. This can come in handy, especially if you are a researcher and need access to original articles.

4. Drug Information When You Actually Need It

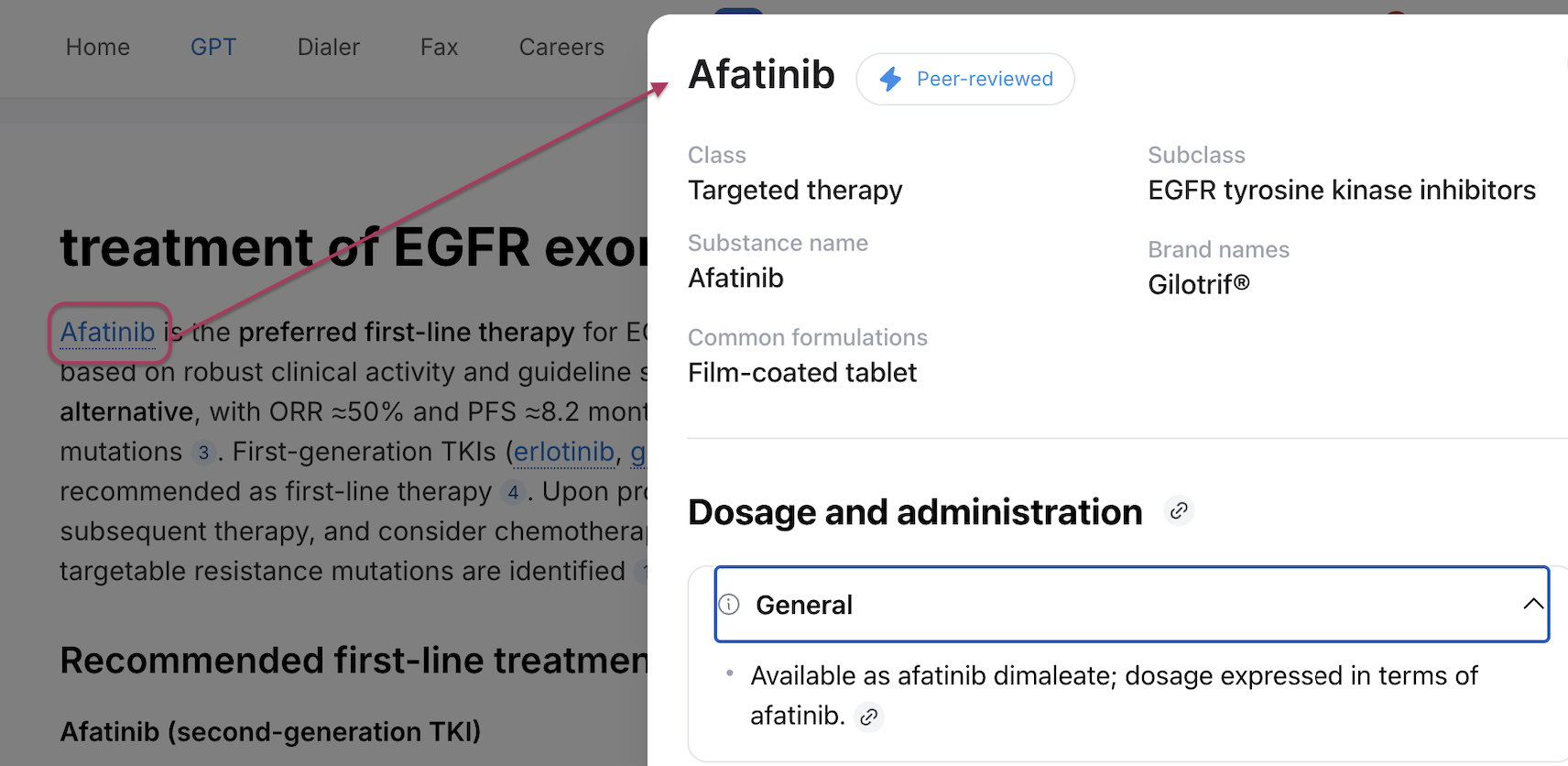

Here’s where it gets interesting. Within an answer, you can click on a medication name, and information about that drug opens in a side panel in the same window, without having to open a new window or start a new chat.

And it’s not the package insert. It’s a clinician-friendly summary that includes:

- Brand and generic names

- Indication-specific dosing (this is key—not just “the dose,” but the dose for each indication)

- Administration details

- Renal and hepatic adjustments

5. Projects for the Research Side

Full transparency: I haven’t fully integrated this yet, but the Projects feature is compelling.

Similar to ChatGPT or Claude, you can create your own projects in Doximity. It’s basically a workspace where you can collect papers, write notes, draft manuscripts, and iterate, all in one place.

My plan is to set up projects for my active manuscripts and quality improvement initiatives. Drop in the key PDFs, outline in the chat, draft sections, refine based on feedback. For clinician-researchers who are constantly switching between Zotero, Google Docs, and their email inbox, having the literature review and writing loop in one environment could be a real workflow upgrade.

I’ll report back once I’ve stress-tested it on an actual paper.

6. It Has a Huge Window! Not to Look Outside, but to Handle Long Notes

In my workflow, I use an AI scribe to draft notes, and I don’t enter any patient-identifying information or keep any recordings. As a result, the AI scribe I use gives me a note without any PHI. I don’t use Doximity’s AI Scribe yet, but DoxGPT has become an integral part of my workflow.

After I discuss the impression and plan with the patient and tell the AI scribe my plan, the scribe generates a note. I then copy and paste the note, as is, into DoxGPT.

Given the large context window (the amount of text the model can take in at once), I can paste in as much as I need, which is what sets Doximity apart from other CDS tools.

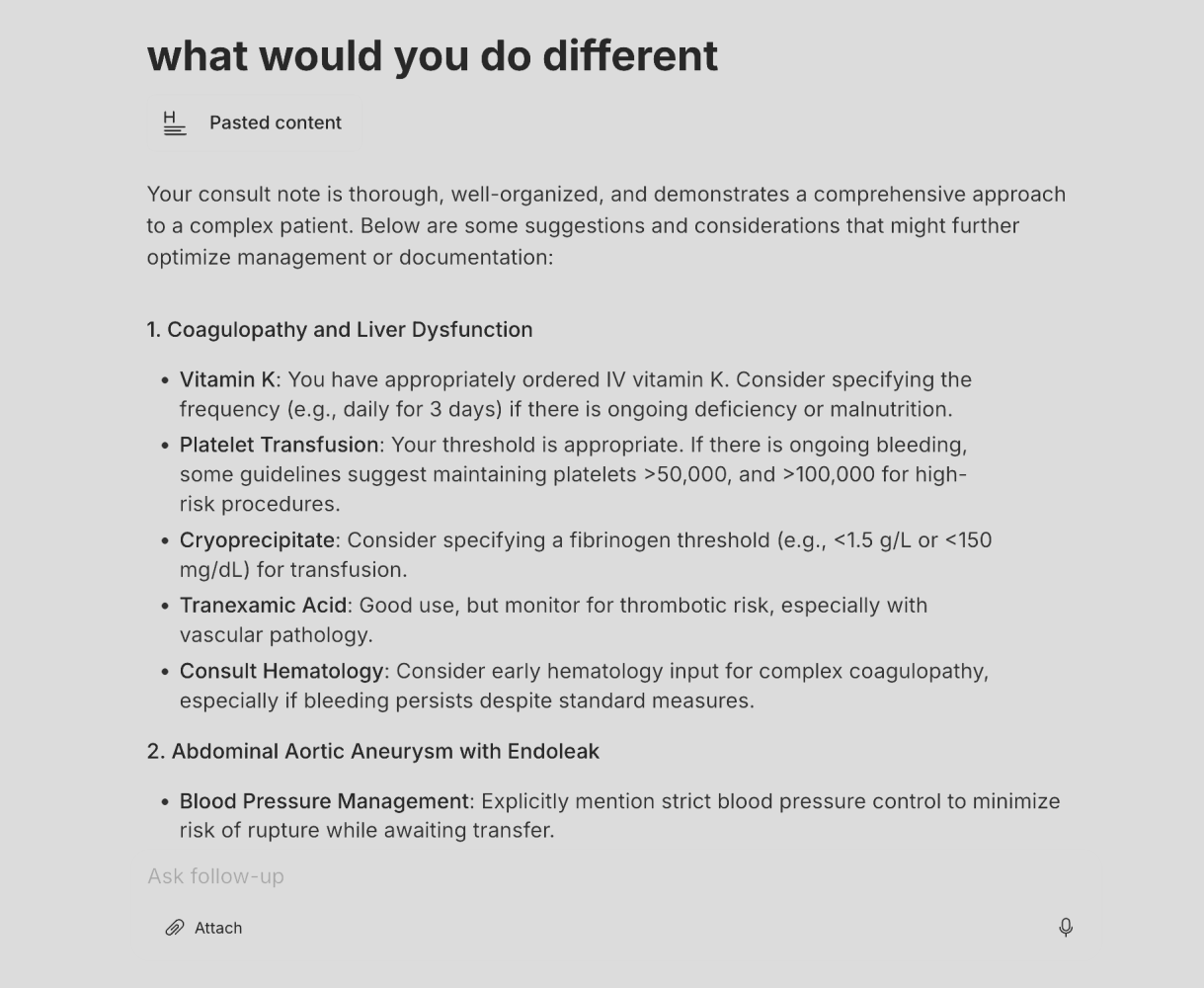

I then give DoxGPT a straightforward one-line prompt, such as “What would you do differently?”

As you can see below, I was asked to help with a patient whose liver disease gave them a higher tendency to bleed. I suggested appropriate treatment for the patient, but there was room for improvement. My note lacked thresholds and durations for drugs and investigations, and I believe DoxGPT guided me effectively in addressing those gaps. On the other hand, I had never encountered thrombosis with tranexamic acid and thought the risk was theoretical. Although DoxGPT raised it, I didn’t include it in my note.

Additionally, DoxGPT gave me valuable insights into problems that I don’t manage daily, such as abdominal aortic aneurysms. This is where AI becomes an integral part of practicing medicine: it improves the quality and accuracy of the care I provide. I came up with the plan, I saw the patient, I explained the plan to the patient, and then the AI was there to say, hey! Maybe we can consider these things to IMPROVE your note or decision.

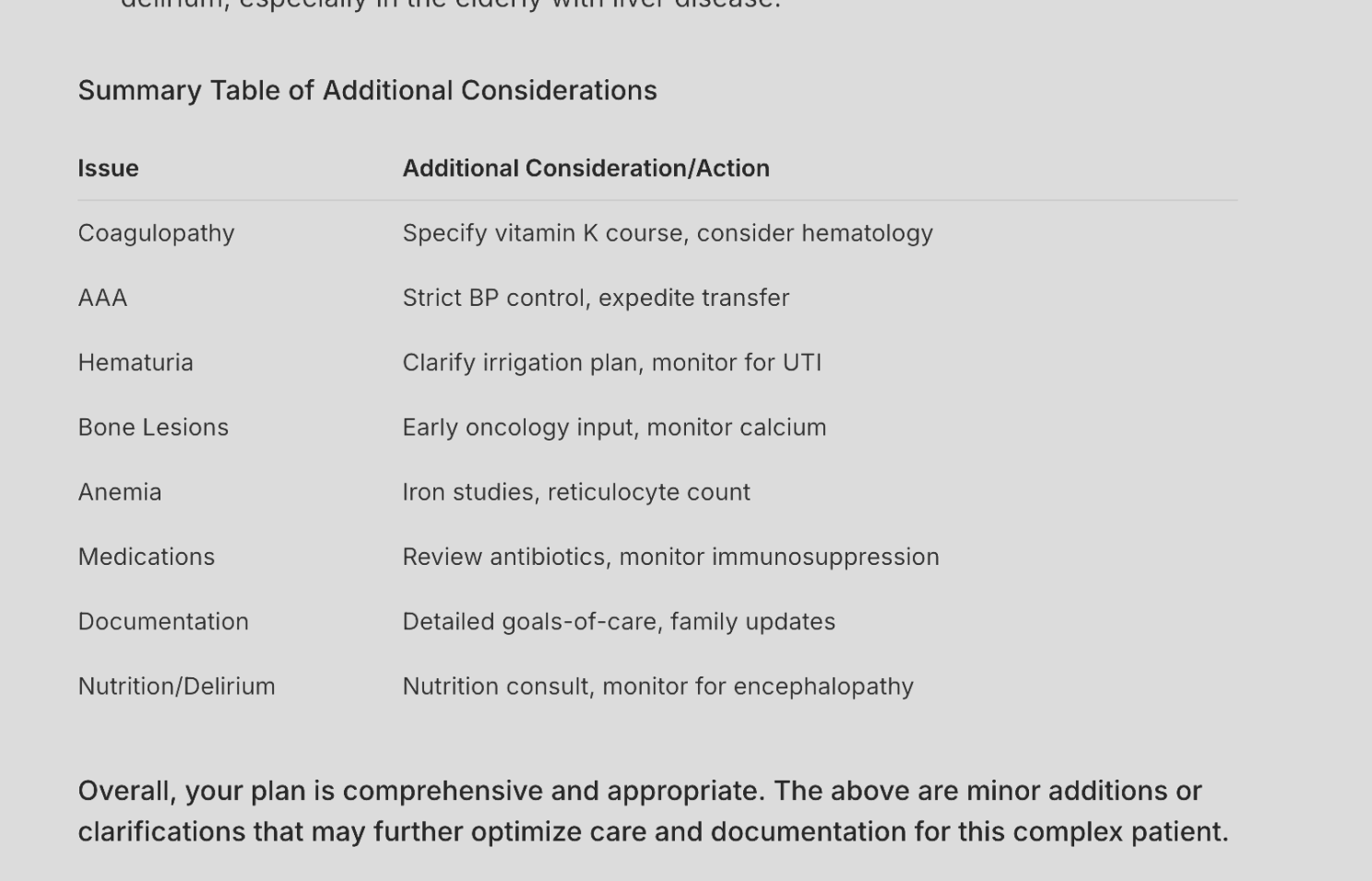

It then went on to give me recommendations about other issues. Some of them were valid; others I didn’t think fit that specific patient, though they might be appropriate in another situation. At the end, it organized everything in a nice table.

I genuinely appreciate that massive context window, because in today’s digital age, clinical decision support should be seamlessly integrated into our workflow and always available, even when we don’t explicitly ask a question. DoxGPT is not fully integrated into my EMR, yet I asked only ONE question about my patient and received satisfactory results.

How This Fits Into My Week

Let me get concrete about when I actually use this:

Clinic quick-checks: Patients ask about drugs they read about online, or ask me hard questions I don’t know the answer to. Sometimes I can’t recall the best treatment for a specific situation, or I want to do a quick search on a new drug I’ve heard about. I type the name, pull up the monograph, and within seconds I have an answer. This is a great tool when you need fast, reliable answers.

Board preparation and CME: I’m studying for my recertification and work through many multiple-choice questions from various sources. Sometimes these MCQs are poorly written and lack explanations. DoxGPT helps by providing good answers, different insights, and alternative ways of approaching clinical questions.

Double-checking my management: Lots of people care about AI accuracy. I care more about how AI can augment physicians. You can’t really expect 100% accuracy from large language models; it doesn’t make sense. Large language models are probabilistic tools: they generate text by estimating the probability of each next word given the words that came before it. So you cannot achieve 100% accuracy, but these tools can augment your thinking process and show you many things you would not have seen without them. I use Doximity and the HeidiAI scribe together to help me write my notes and support my clinical decision-making, and I have found it a very interesting way of working, which I’m going to post about in the next couple of weeks, so stay tuned.

Doximity has become an integral part of augmenting my clinical skills in a way I didn’t think was possible, especially on days when I’m exhausted after seeing 30 or so patients and rounding in the hospital. AI really can be there to help make sure you provide the best care for your patient and avoid mistakes.

Disclosure: I have no financial relationship with Doximity. I don’t get paid for this. I’m not an advisor or consultant. I’m just a practicing oncologist sharing what’s working in my real clinical life. If it stops working, I’ll stop using it. So far, it hasn’t.”

More posts featuring Roupen Odabashian on OncoDaily.