Olivier Elemento: Accelerating Innovation with Safe and Effective Guardrails

Olivier Elemento, Director of the Englander Institute for Precision Medicine at Weill Cornell Medicine, shared a post on LinkedIn:

“AI Governance: The Accelerator, Not the Anchor

Many view AI governance as bureaucracy that stifles innovation. I see it differently. Hospitals already have robust quality and safety frameworks, but AI introduces unique failure modes that demand a tailored approach. Performance can drift post-deployment, models can degrade across different sites, and using proxies like ‘cost’ for ‘need’ can bake in systemic bias, as seen in the landmark Optum algorithm study published in Science.

The goal of effective governance is to build guardrails that enable you to move faster and safer. A strong framework prevents rework, public stumbles, and regulatory surprises.

Here’s a practical, lightweight approach to doing it right:

Integrate, Don’t Duplicate: Instead of creating a new silo, embed an AI review docket into your existing committees. The NIST AI Risk Management Framework provides an excellent, lightweight backbone for this, structuring your efforts around core functions like Govern, Map, and Manage.

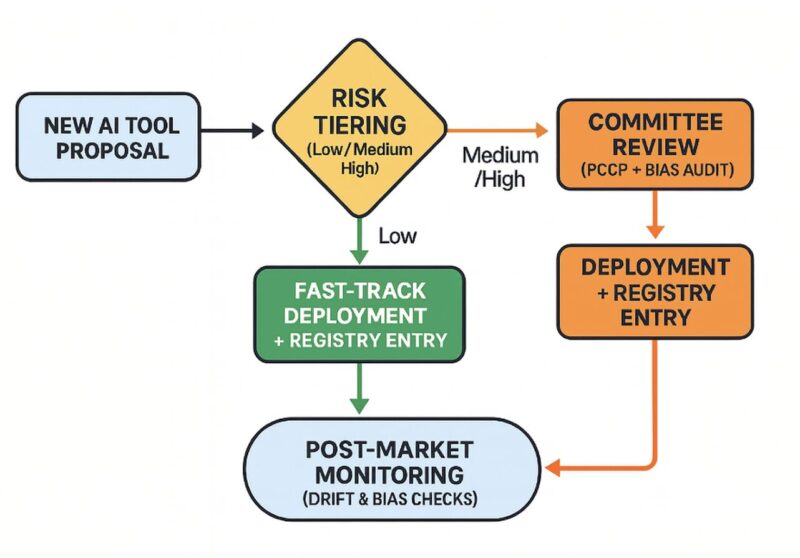

Use Risk Tiers: Not all AI is created equal. Fast-track low-risk tools and apply more rigorous review only for high-risk clinical decision support, aligning with frameworks like the EU AI Act.

Maintain a Lean AI Registry: A simple, one-page summary per model should list its owner, intended use, validation data (including subgroup performance), monitoring plan, and the date of its last drift check.

Plan for Change: The U.S. Food and Drug Administration’s concept of a Predetermined Change Control Plan (PCCP) is a game-changer. It lets you define acceptable model updates in advance so your teams can iterate without restarting the entire compliance process.

Document Human Oversight: Define exactly where and how clinicians can override an AI’s recommendation, what gets logged, and who is accountable. This principle of ensuring meaningful human control is a central pillar of the World Health Organization’s (WHO) guidance on AI ethics.

Monitor in the Wild: The literature on real-world model performance, like the independent audits of sepsis models in JAMA, shows us that validation is not a one-time event. Mandate independent evaluation before go-live, followed by ongoing automated checks for performance drift and bias.

I think framing governance as a performance enabler, rather than a compliance chore, is the key to getting it right. By tracking metrics like time-to-production and the impact of deployed models, governance is forced to prove its value.”
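
As an editorial illustration (not part of the original post), the lean registry, risk-tier, and drift-check ideas above can be sketched in a few lines of Python. Every name here (`RegistryEntry`, `RiskTier`, `drift_check_overdue`, `subgroups_below`), the 30- and 90-day intervals, and the example values are hypothetical assumptions chosen for the sketch. None of them are prescribed by the post, NIST, the FDA, or the EU AI Act.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from enum import Enum


class RiskTier(Enum):
    LOW = "low"    # e.g. tools that can be fast-tracked
    HIGH = "high"  # e.g. clinical decision support needing rigorous review


# Assumed re-check intervals in days; a real program would set its own.
MAX_DAYS_BETWEEN_DRIFT_CHECKS = {RiskTier.LOW: 90, RiskTier.HIGH: 30}


@dataclass
class RegistryEntry:
    """One-page summary of a deployed model (fields follow the post's list)."""
    name: str
    owner: str
    intended_use: str
    risk_tier: RiskTier
    monitoring_plan: str
    last_drift_check: date
    # Validation performance per subgroup, here stored as AUC values.
    subgroup_auc: dict = field(default_factory=dict)


def drift_check_overdue(entry: RegistryEntry, today: date) -> bool:
    """True if the last drift check is older than the tier's allowed interval."""
    limit = timedelta(days=MAX_DAYS_BETWEEN_DRIFT_CHECKS[entry.risk_tier])
    return today - entry.last_drift_check > limit


def subgroups_below(entry: RegistryEntry, floor: float) -> list:
    """Names of subgroups whose recorded AUC falls below the given floor."""
    return sorted(g for g, auc in entry.subgroup_auc.items() if auc < floor)


# Made-up example entry; the numbers are illustrative, not real results.
entry = RegistryEntry(
    name="example-sepsis-alert",
    owner="clinical-informatics",
    intended_use="Early warning for adult inpatients",
    risk_tier=RiskTier.HIGH,
    monitoring_plan="Monthly automated AUC and calibration check",
    last_drift_check=date(2024, 1, 1),
    subgroup_auc={"overall": 0.80, "age_65_plus": 0.71},
)

print(drift_check_overdue(entry, today=date(2024, 3, 1)))
print(subgroups_below(entry, floor=0.75))
```

Keeping the tier on the registry entry means a single scheduled job could flag overdue checks for every model, with the stricter interval applying only to the high-risk tier.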

Study referenced in the post (published in Science):

Title: Dissecting racial bias in an algorithm used to manage the health of populations

Authors: Ziad Obermeyer, Brian Powers, Christine Vogeli, Sendhil Mullainathan

